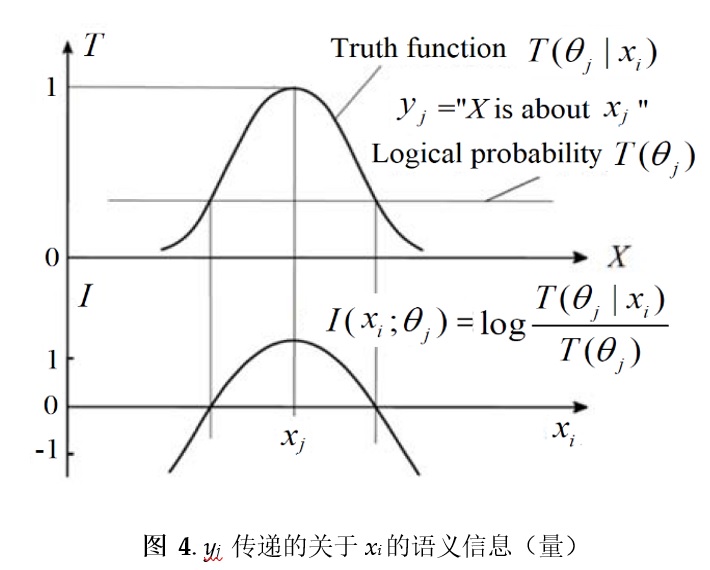

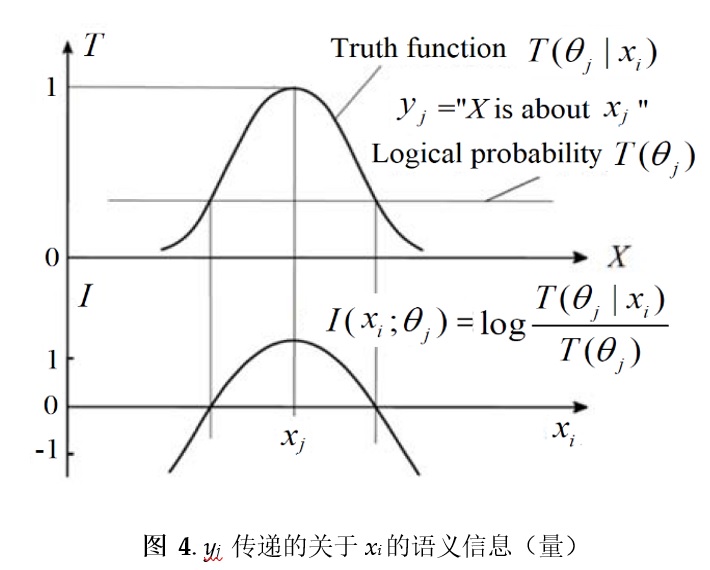

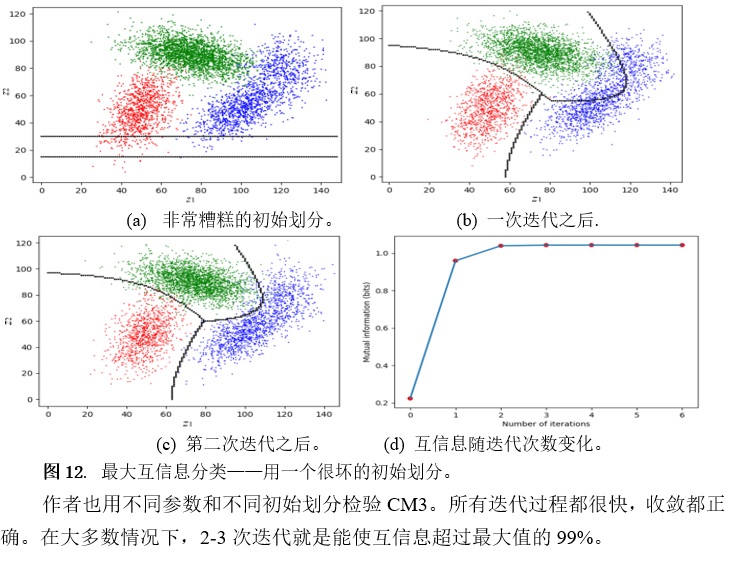

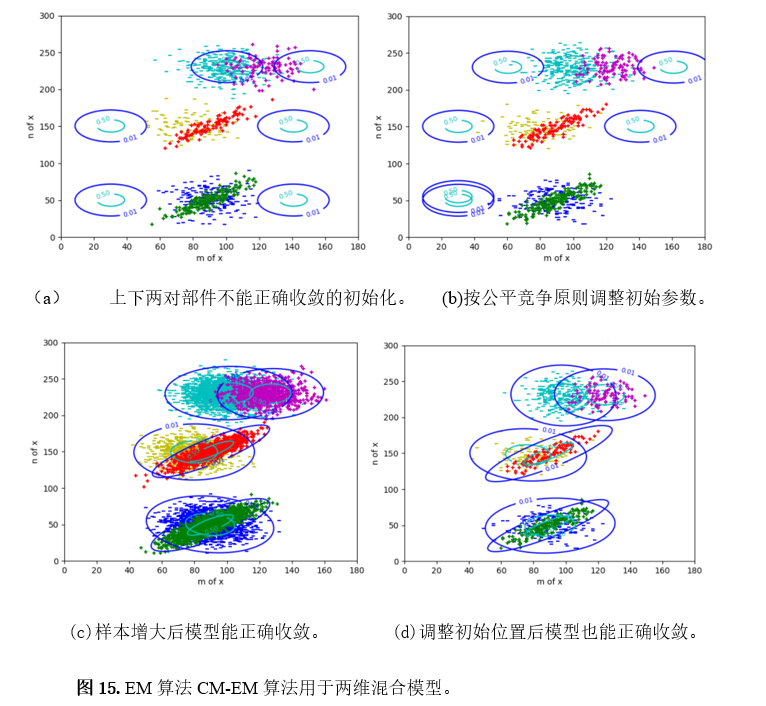

Abstract: Many researchers have sought to unify probability and logic by defining a reasonable logical probability or probabilistic logic. This paper seeks to unify statistics and logic so that statistical probability and logical probability can be used at the same time. To this end, it proposes the P–T probability framework, which combines Shannon’s statistical probability framework for communication, Kolmogorov’s probability axioms for logical probability, and Zadeh’s membership functions used as truth functions. The two kinds of probability are connected by an extended Bayes’ theorem, with which a likelihood function and a truth function can be converted into each other. Hence, truth functions (in logic) can be trained with sampling distributions (in statistics). The framework grew out of the author’s long-term studies on semantic information, statistical learning, and color vision. The paper first presents the P–T probability framework and explains the different probabilities in it through its application to semantic information theory. The framework and the semantic information methods are then applied to statistical learning, statistical mechanics, hypothesis evaluation (including falsification), confirmation, and Bayesian reasoning. These theoretical applications illustrate the reasonableness and practicability of the framework. The framework should be helpful for interpretable AI; interpreting neural networks with it requires further study.

Keywords: semantic information theory; Bayesian inference; machine learning; multilabel classifications; maximum mutual information classifications; mixture models; confirmation measure; truth function.
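To make the conversion mentioned in the abstract concrete, the following is a minimal sketch of how a truth function and a likelihood function might be converted into each other over a finite set of instances. The two formulas are an assumption about the form of the extended Bayes’ theorem, since the abstract does not state it: the likelihood is taken as the prior times the truth function, normalized by the logical probability, and the truth function is taken as the likelihood-to-prior ratio rescaled so that its maximum is 1. The function names and the example numbers are hypothetical.

```python
def truth_to_likelihood(prior, truth):
    """Assumed form: P(x|theta) = P(x) * T(theta|x) / T(theta),
    where T(theta) = sum_x P(x) * T(theta|x) is the logical probability."""
    logical_prob = sum(p * t for p, t in zip(prior, truth))
    return [p * t / logical_prob for p, t in zip(prior, truth)]


def likelihood_to_truth(prior, likelihood):
    """Assumed form: T(theta|x) = [P(x|theta) / P(x)] / max_x [P(x|theta) / P(x)].
    Requires P(x) > 0 for every instance x."""
    ratios = [lk / p for p, lk in zip(prior, likelihood)]
    peak = max(ratios)
    return [r / peak for r in ratios]


# Hypothetical example: three instances with a prior P(x) and a truth
# function T(theta|x) whose maximum is 1.
prior = [0.2, 0.5, 0.3]
truth = [1.0, 0.5, 0.0]

likelihood = truth_to_likelihood(prior, truth)
recovered_truth = likelihood_to_truth(prior, likelihood)
```

Under these assumptions the round trip recovers the original truth function whenever its maximum value is 1, which is the sense in which a truth function could be obtained from a sampling distribution.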